Abstract

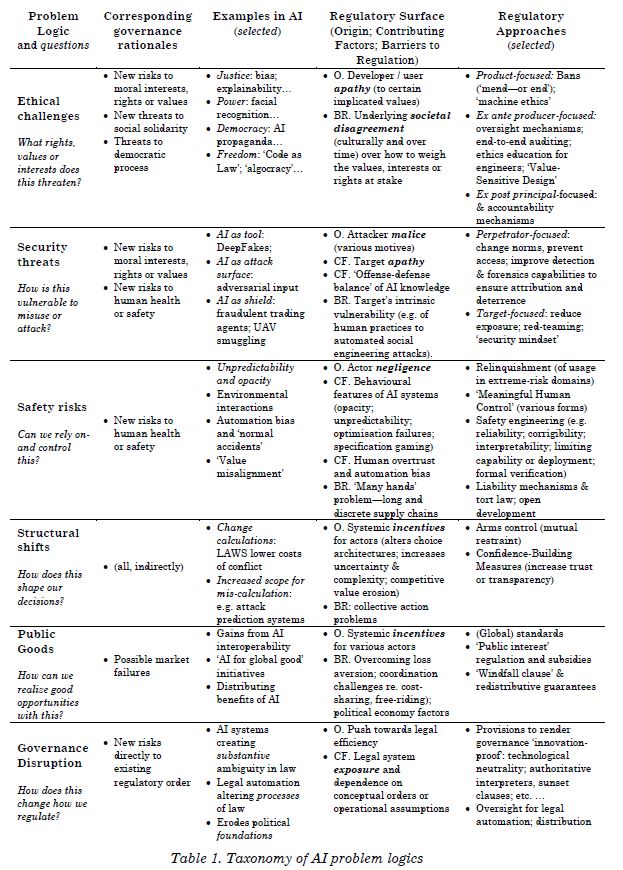

How do we regulate a changing technology, with changing uses, in a changing world? This chapter argues that while existing (inter)national AI governance approaches are important, they are often siloed: technology-centric approaches focus on individual AI applications, whereas law-centric approaches emphasize AI’s effects on pre-existing legal fields or doctrines. To foster a more systematic, functional and effective AI regulatory ecosystem, policy actors should complement these approaches with a regulatory perspective that emphasizes how, when, and why AI applications enable patterns of ‘sociotechnical change’. Drawing on theories from the emerging field of ‘TechLaw’, the chapter explores how this perspective can yield more informed, nuanced, and actionable insights on AI regulation. A focus on sociotechnical change can help analyse when and why AI applications actually create a meaningful rationale for new regulation, and how they are consequently best approached as targets for regulatory intervention, considering not just the technology but also six distinct ‘problem logics’ that appear around AI issues across domains. The chapter concludes by briefly reviewing concrete institutional and regulatory actions that can draw on this approach to improve the regulatory triage, tailoring, timing and responsiveness, and design of AI policy.